I've made a program that converts a normal map texture (DirectX DDS supported directly) into a height map image (PNG).

Here is a high-level overview of my algorithm:

- Loading the Normal Map: The normal map image is loaded and converted to a floating-point representation in the range [-1, 1].

- Preprocessing the Normal Map: The normal map is tiled into a larger map to handle boundary conditions more effectively during the processing.

- Defining Subregions: The larger normal map is divided into smaller subregions to allow for parallel processing.

- Computing Height Maps for Subregions: Each subregion's height map is computed using Poisson integration, which involves solving a large sparse linear system.

What is the Poisson Equation?

The Poisson equation is a fundamental equation in physics and engineering, often used to describe the potential field caused by a given charge or mass density distribution. In the context of image processing, it's used to reconstruct a surface from gradient information.

In our case, the Poisson equation helps to convert the normal map into a height map by solving for the height values at each pixel based on the gradients.
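As a rough illustration of where the gradients come from, the sketch below decodes an 8-bit normal map into per-pixel slopes. It is not taken from the tool itself: the function name `normals_to_gradients`, the `eps` guard, and the channel layout (X in red, Y in green, Z in blue) are assumptions, and the sign of the Y slope depends on whether the map follows the DirectX or OpenGL green-channel convention.

```python
import numpy as np

def normals_to_gradients(rgb, eps=1e-3):
    """Decode an (H, W, 3) uint8 normal map into slopes (dh/dx, dh/dy).

    Hypothetical helper: assumes X=red, Y=green, Z=blue in tangent space.
    """
    # Map [0, 255] to [-1, 1], as in the loading step.
    n = rgb.astype(np.float64) / 255.0 * 2.0 - 1.0
    # Re-normalize, since 8-bit quantization leaves vectors slightly off unit length.
    n /= np.maximum(np.linalg.norm(n, axis=-1, keepdims=True), eps)
    # A surface z = h(x, y) has a normal proportional to (-dh/dx, -dh/dy, 1).
    nz = np.maximum(n[..., 2], eps)  # guard against division by zero
    dh_dx = -n[..., 0] / nz
    dh_dy = -n[..., 1] / nz          # flip the sign for the other green-channel convention
    return dh_dx, dh_dy

# A flat normal (128, 128, 255) gives slopes close to zero.
flat = np.full((4, 4, 3), [128, 128, 255], dtype=np.uint8)
gx_flat, gy_flat = normals_to_gradients(flat)

# A normal tilted 45 degrees toward +X gives a slope of about -1 in x.
tilted = np.full((4, 4, 3), [255, 128, 255], dtype=np.uint8)
gx_tilt, gy_tilt = normals_to_gradients(tilted)
```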

The Equation

The Poisson equation in two dimensions can be written as:

∂²h/∂x² + ∂²h/∂y² = f(x, y)

Here:

- h is the height function we want to find.

- f(x, y) is the divergence of the known gradient field, that is, the sum of the x-derivative of the x-gradient and the y-derivative of the y-gradient.

Discretizing the Poisson Equation

To solve this on a computer, we convert the continuous equation into a discrete form using finite differences. This involves approximating the second derivatives with differences between neighboring pixel values.

For a pixel at position (i, j):

(h(i+1, j) - 2h(i, j) + h(i-1, j))/Δx² + (h(i, j+1) - 2h(i, j) + h(i, j-1))/Δy² = f(i, j)

Assuming uniform grid spacing (Δx = Δy = 1 for simplicity):

h(i+1, j) + h(i-1, j) + h(i, j+1) + h(i, j-1) - 4*h(i, j) = f(i, j)

This forms a system of linear equations where each pixel’s height value depends on its neighbors.
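The five-point relation above can be checked numerically. The sketch below is only an illustration, not the tool's code: `laplacian` and `divergence` are hypothetical helper names, wrap-around (periodic) neighbors are assumed because the normal map is tiled, and the choice of which array axis is x and which is y is also an assumption.

```python
import numpy as np

def laplacian(h):
    """Five-point stencil: the four neighbors minus 4 times the center (periodic edges)."""
    return (np.roll(h, 1, axis=0) + np.roll(h, -1, axis=0)
            + np.roll(h, 1, axis=1) + np.roll(h, -1, axis=1) - 4.0 * h)

def divergence(gx, gy):
    """Backward-difference divergence; gx varies along axis 1, gy along axis 0."""
    return (gx - np.roll(gx, 1, axis=1)) + (gy - np.roll(gy, 1, axis=0))

# Build forward-difference gradients of a known surface, then confirm that
# their divergence equals the five-point Laplacian of that surface.
rng = np.random.default_rng(0)
h = rng.random((8, 8))
gx = np.roll(h, -1, axis=1) - h
gy = np.roll(h, -1, axis=0) - h
f = divergence(gx, gy)
stencil_matches = np.allclose(f, laplacian(h))
```

Pairing forward differences for the gradients with backward differences for the divergence is what makes the right-hand side f line up exactly with the stencil on the left-hand side.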

Matrix Representation

We represent this system as a matrix equation:

A * h = b

Here:

- A is a sparse matrix encoding the relationships between neighboring pixels.

- h is the vector of unknown height values.

- b is the vector derived from the gradient information f(x, y).
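To make A, h, and b concrete, here is a minimal sketch that assembles the five-point matrix for a small grid with SciPy and solves the system directly. It is an assumption-laden illustration rather than the tool's implementation: `build_laplacian` is a hypothetical helper, heights outside the grid are treated as zero so that A is invertible, and a direct solver is used only because the grid is tiny.

```python
import numpy as np
import scipy.sparse as sp
from scipy.sparse.linalg import spsolve

def build_laplacian(rows, cols):
    """Sparse five-point Laplacian for a rows x cols grid flattened row by row."""
    def second_diff(n):
        # 1D second-difference matrix with 1, -2, 1 on the three central diagonals.
        return sp.diags([1.0, -2.0, 1.0], offsets=[-1, 0, 1], shape=(n, n))
    # The first term couples left/right neighbors, the second couples up/down neighbors.
    A = (sp.kron(sp.identity(rows), second_diff(cols))
         + sp.kron(second_diff(rows), sp.identity(cols)))
    return A.tocsc()

rows, cols = 6, 5
A = build_laplacian(rows, cols)

# Use a known height field to produce b, then recover it by solving A * h = b.
h_true = np.random.default_rng(0).random((rows, cols))
b = A @ h_true.ravel()
h = spsolve(A, b).reshape(rows, cols)
```

Each row of A holds at most five non-zero entries (-4 on the diagonal and 1 for each neighbor), which is why a sparse format keeps memory manageable even for large subregions.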

Solving the System

To find the height map, we solve the linear system:

A * h = b

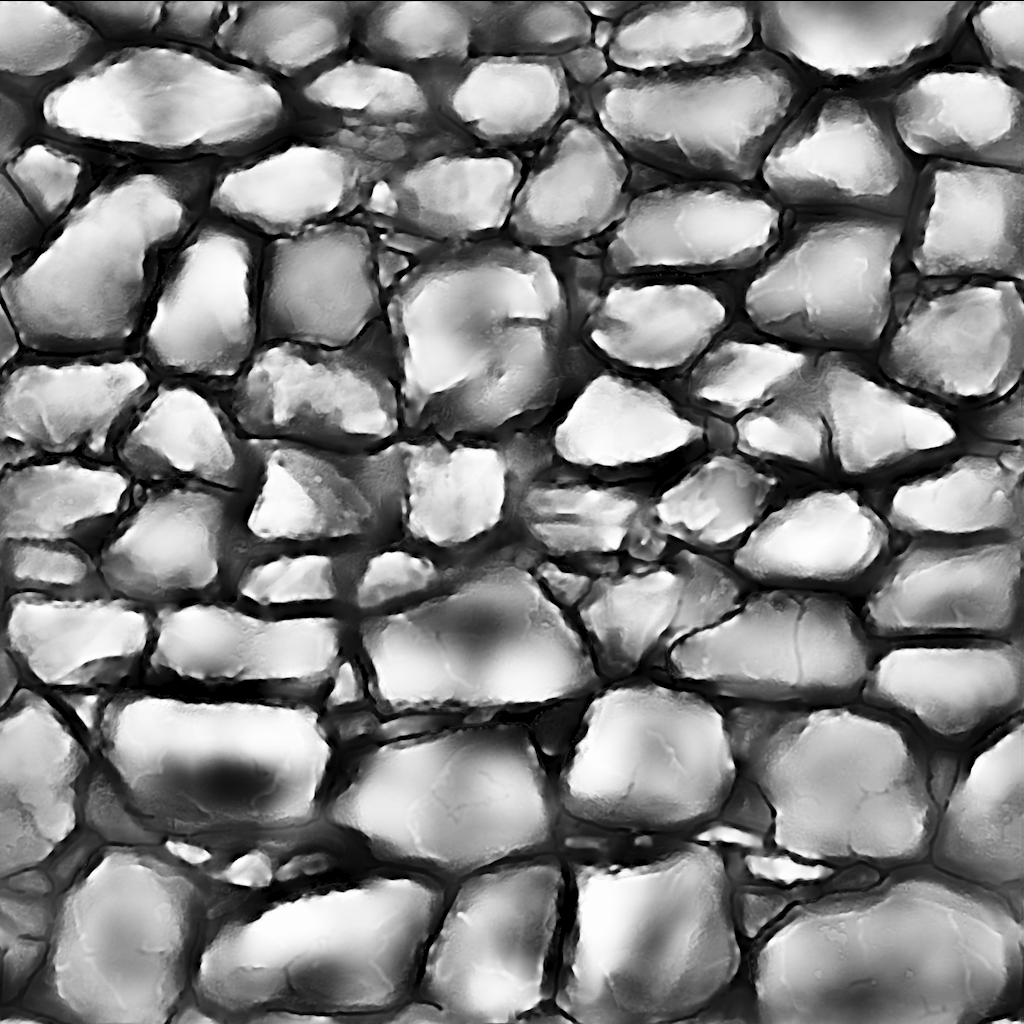

using numerical methods. This gives us the height values at each pixel, which we then reshape into the height map.

- Combining Subregion Height Maps: The height maps from all subregions are combined into a single height map using a weighted blending technique. This version uses cosine smoothing, which minimizes the boundary effect between subregions. Previously, I used Gaussian blurring and 50% overlap, but this method gives better results. You can see the difference between the non-FFT and FFT versions (fewer repeated boundary artifacts).

- Enhancing High-Frequency Details: High-frequency details are enhanced using multi-scale unsharp masking to improve the visual quality of the height map.

- Clipping Histogram Levels: The histogram levels of the height map are adjusted to improve contrast.

- S-Curve Histogram Adjustment: An S-curve adjustment is applied to smooth the height map.

- Removing Spikes: Spikes in the height map are removed using a combination of median filtering and Gaussian blurring.

- Saving the Height Map: The final height map is saved as an image file.
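Three of the steps in the list above (cosine-weighted blending, level clipping, and the S-curve) can be sketched with NumPy alone. Everything here is illustrative and assumed rather than taken from the tool: the helper names, the raised-cosine window shape, the 1st/99th percentile limits, and the smoothstep polynomial used as the S-curve are placeholders for whatever the actual script uses.

```python
import numpy as np

def cosine_window(rows, cols):
    """Raised-cosine weights: largest at the tile center, small but non-zero at the edges."""
    def ramp(n):
        t = (np.arange(n) + 0.5) / n
        return 0.5 - 0.5 * np.cos(2.0 * np.pi * t)
    return np.outer(ramp(rows), ramp(cols))

def blend_tiles(tiles, corners, shape):
    """Weighted average of overlapping tiles; corners are (row, col) of each top-left pixel."""
    acc = np.zeros(shape)
    weight = np.zeros(shape)
    for tile, (r, c) in zip(tiles, corners):
        th, tw = tile.shape
        w = cosine_window(th, tw)
        acc[r:r + th, c:c + tw] += tile * w
        weight[r:r + th, c:c + tw] += w
    return acc / np.maximum(weight, 1e-12)  # guard for pixels no tile covers

def clip_levels(h, low=1.0, high=99.0):
    """Clip to the given percentiles and rescale to [0, 1]."""
    lo, hi = np.percentile(h, [low, high])
    if hi <= lo:
        return np.zeros_like(h)
    return np.clip((h - lo) / (hi - lo), 0.0, 1.0)

def s_curve(h):
    """Smoothstep, one possible S-curve on [0, 1]."""
    return h * h * (3.0 - 2.0 * h)

# Two constant tiles that overlap by two columns blend back to a constant.
tiles = [np.ones((4, 4)), np.ones((4, 4))]
blended = blend_tiles(tiles, [(0, 0), (0, 2)], (4, 6))

# Apply level clipping and the S-curve to a simple horizontal ramp.
levels = s_curve(clip_levels(blended * np.linspace(0.0, 1.0, 6)))
```

Because the weights fall off toward each tile's edge, a pixel in an overlap is dominated by whichever tile has it closer to its center, which is what suppresses visible seams.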

Is this algorithm better than Adobe Normal-To-Height HQ?

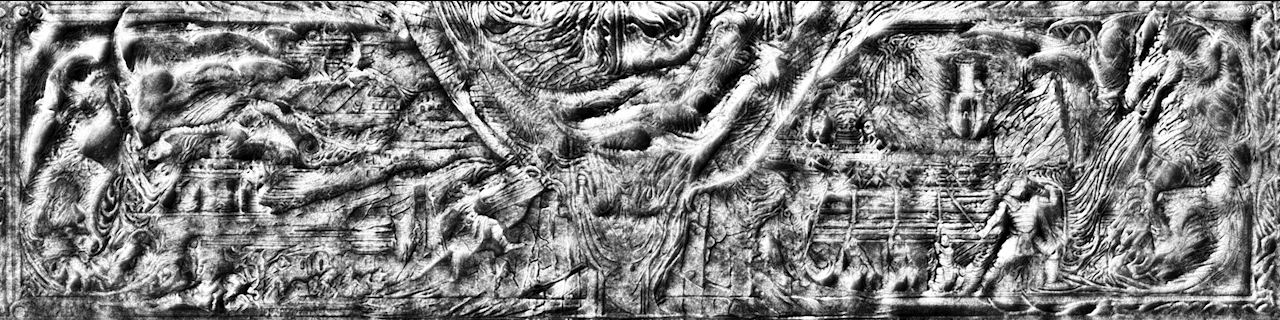

That is a good question. I will let you decide. Here are some comparisons:

Source of normal texture used to generate the height maps below: Iconic's Alduin's Wall Retexture (16K-8K-4K) by IconicDeath

Adobe (around 40 seconds)

This code FFT version (30 seconds)

Adobe (around 20 seconds)

My code (processing time: around 5.5 min)

My code FFT version (processing time: around 9 seconds)

Full source code:

Please check the pipeline project. It is written in Python, so the full source code for the script is available by default.

5 comments

Without wanting to diminish your work, I'm afraid I have to say your tool is not the right one for me.

I have upscaled the textures from Enderal and also used an upscaling model to create the normal maps from the diffuse ones. Unfortunately these normals don't work well with your tool and the effect is way too exaggerated :/

You correctly noticed that upscaling normals can deform them, that sharpening exaggerates them, and that AI upscaling introduces geometry hallucinations when normals are treated as ordinary images. Some authors also generate normals by simple image manipulation of the source diffuse. In all of those cases my tool will not give optimal results, and using AI to generate the height map from the diffuse has a chance of looking better.

Just to be clear, the model for the normal maps is specially trained for this use and generates them from the diffuse ones. In other words, no existing normals are upscaled to avoid the problems mentioned :)

However, it is of course possible that errors may still creep in here and there.