File information

Created by: Dwemer Dynamics

Uploaded by: tylermaister

981 comments

-

Locked, sticky: FOR ANY ISSUES INSTALLING THE MOD, PLEASE POST THEM IN THE DISCORD UNDER THE i-have-a-problem- CHANNEL!

https://discord.gg/NDn9qud2ug

-

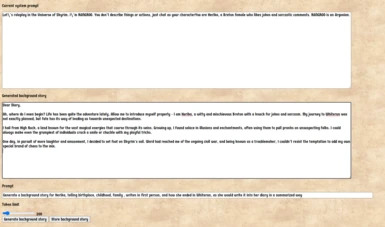

I'm not being critical: it is amazing, and one would expect to have to pay money to get it to work as intended, since it has a server. I have no problem with that. One person who has Herika on YouTube spent 400 dollars to get her to work as he wants, and that is also fine. What is murky for me is how much we need to do to get a barebones setup going. I don't see myself spending 400 dollars. So can I get it working for 100 or 200 dollars? It is more than a tad murky. I presume there is more info on the Discord, but a more in-depth description/outline here would be welcome.

I have a vague memory of someone several years back saying they could do something similar for a Fallout 4 follower they were making, but they seem to have abandoned that project.

-

The future truly is now, and we’re all getting older by the meme. Is there a way to add cai support for this, like in this similar Minecraft mod? https://www.curseforge.com/minecraft/mc-mods/mcai

-

Please don't be mad, but SimpleGateWayer.dll somehow causes a crash when certain bandits fire an arrow:

Skyrim SSE v1.6.1170

CrashLoggerSSE v1-15-0-0 Oct 12 2024 11:33:37

Unhandled exception "EXCEPTION_ACCESS_VIOLATION" at 0x7FFDEFFE782C SimpleGateWayer.dll+007782C mov ecx, [rax+0x1C]

Exception Flags: 0x00000000

Number of Parameters: 2

Access Violation: Tried to read memory at 0x000000000104

SYSTEM SPECS:

OS: Microsoft Windows 10 Home v10.0.19045

CPU: AuthenticAMD AMD Ryzen 5 1600 Six-Core Processor

GPU #1: Nvidia GA104 [GeForce RTX 3060 Ti Lite Hash Rate]

GPU #2: Microsoft Basic Render Driver

PHYSICAL MEMORY: 25.22 GB/63.93 GB

GPU MEMORY: 2.16/5.76 GB

Detected Virtual Machine: Microsoft Hyper-V (100%)

It also happens when I or a follower gets power attacked by a snow bear.

Maybe this is an incompatibility. I'll keep deactivating mods and testing, because I did not like having to remove Herika.

edit: This must be an incompatibility somewhere because I absolutely did not have any of this crashing before the last batch of mods I added.

edit2: I noticed the dll added a file to my overwrite folder. But now I'm using the Herika from chim ai follower framework, which uses no dll.

-

Have you guys found a way for the server to actually be able to fuckin speak?

I'm checking the prompts from kobold and they generate fine and quickly, but sometimes the distro server isn't able to "speak" until I alt-tab.

And that's a problem, because too much alt-tabbing makes Skyrim CTD. xD

I'm trying everything I can imagine to get Herika to "refresh", like summoning her, fusrodah-ing her, molesting her, the soulgaze, the diary, but nothing works until I alt-tab.

And yeah, of course, as the quotes "accumulate but are not spoken", it seriously kills the fps.

-

Does this work with either the Tortoise or Fish Speech TTS models? These are the only free, open-source, local text-to-speech systems I've seen that rival or even exceed Eleven Labs. They do an amazing job of adding inflection and not sounding robotic, and are capable of voice cloning as well. Fish Speech also has API access for around 2 cents per minute, so for $10 you'd get something close to 17 hours of voice, waaaaay cheaper than EL.
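A quick sanity check on the cost figure above, as a rough sketch only. The 2 cents per minute price is the number quoted in the comment, not a verified rate, and the function names are made up for illustration.

```python
def minutes_for_budget(budget_usd: float, price_per_minute_usd: float) -> float:
    """Minutes of synthesized speech a budget buys at a flat per-minute price."""
    if price_per_minute_usd <= 0:
        raise ValueError("price per minute must be positive")
    return budget_usd / price_per_minute_usd


def implied_price_per_minute(budget_usd: float, hours: float) -> float:
    """Per-minute price that would make a budget last the given number of hours."""
    if hours <= 0:
        raise ValueError("hours must be positive")
    return budget_usd / (hours * 60)


if __name__ == "__main__":
    budget = 10.0        # dollars, from the comment
    quoted_price = 0.02  # dollars per minute, as quoted (assumption)

    minutes = minutes_for_budget(budget, quoted_price)
    print(f"{minutes:.0f} minutes, about {minutes / 60:.1f} hours")
    print(f"17 hours for $10 implies ${implied_price_per_minute(budget, 17):.4f} per minute")
```

At a flat 2 cents per minute, $10 works out to 500 minutes, or roughly 8.3 hours. Getting 17 hours out of $10 would need a price of about 1 cent per minute, so one of the two numbers in the comment is probably off.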

-

How do I enable voice-to-text to speak with her without having a ChatGPT API key? Is there a mod or something to work around that? I'm new to mods, so I really have no idea.

-

I hope support for faster-whisper can be added to the Speech to Text section!

faster-whisper supports GPU inference and can be run locally.

https://github.com/SYSTRAN/faster-whisper
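For reference, a minimal sketch of local transcription with faster-whisper in Python. It assumes the `faster-whisper` package is installed and a CUDA GPU is available. `transcribe_file`, `format_segments`, and the model size are illustrative choices, and nothing here reflects how the mod's Speech to Text section is actually wired up.

```python
import sys
from typing import Iterable, List, Tuple

Segment = Tuple[float, float, str]  # (start seconds, end seconds, text)


def format_segments(segments: Iterable[Segment]) -> str:
    """Render transcribed segments as one timestamped line each."""
    return "\n".join(
        f"[{start:.2f}s -> {end:.2f}s] {text.strip()}"
        for start, end, text in segments
    )


def transcribe_file(path: str, model_size: str = "small",
                    device: str = "cuda",
                    compute_type: str = "float16") -> List[Segment]:
    """Transcribe an audio file locally (hypothetical helper)."""
    # Imported lazily so the formatting helper works without the package.
    from faster_whisper import WhisperModel

    model = WhisperModel(model_size, device=device, compute_type=compute_type)
    segments, _info = model.transcribe(path, beam_size=5)
    # `segments` is a generator; transcription runs as it is consumed.
    return [(s.start, s.end, s.text) for s in segments]


if __name__ == "__main__":
    # Usage: python transcribe.py recording.wav
    if len(sys.argv) > 1:
        print(format_segments(transcribe_file(sys.argv[1])))
```

On a machine without a GPU, passing `device="cpu"` and `compute_type="int8"` should also work, at lower speed.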

-

Is there a way to make this work without using a Microsoft WSL virtual machine, for example for those running regular Linux and using Wine to launch the game?

Perhaps a Docker image could work?

PS. Discord insists on verifying by phone, no thanks.

-

Me: "O-R-E-M-O-R space N-H-O-J space E-M space L-L-I-K space T-S-U-M space U-O-Y space E-M-A-G space E-H-T space N-I-W space O-T"

Herika: "REMOR space NHOW space EM KILL TSUM UOY EMAG EHT NIW OT..."

Herika: "Reverse it! TO WIN THE GAME YOU MUST KILL ME - JOHN ROMERO."

The future is now

-

What's the guide for XTTSv2?